…I Learned in High School Geometry.

#MeWriting People of a certain age learned, back in high school, the most important skill for understanding how large language models (LLMs) actually work. No, we did not learn about neural networks in auto shop. Side note: I had to endure almost half an hour of one-on-one “academic counseling” to be allowed to enroll in auto shop back in 1987. Lowbrow class, thought by a credentialed adult to be a waste of my talent. Let your favorite LLM complete that hilarious story.

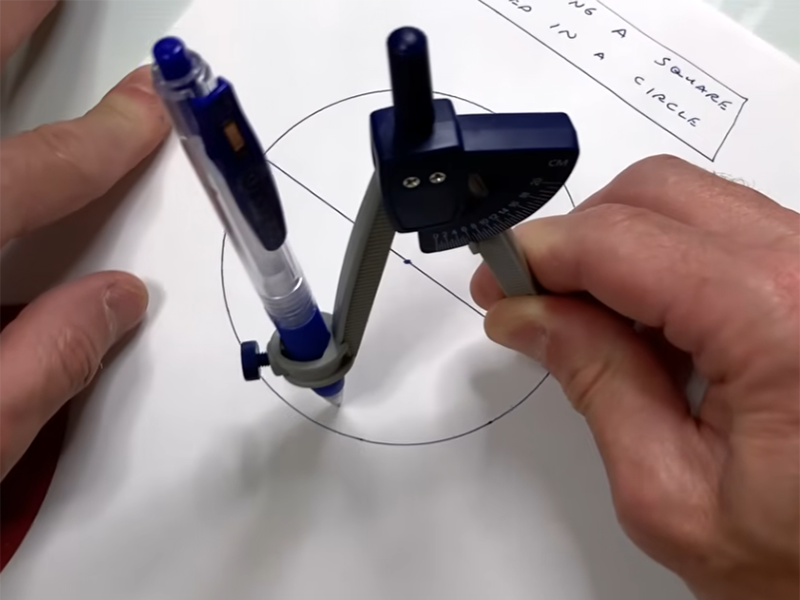

I’m talking about Geometry class. There are two intellectual skills that are generally taught in high school geometry: reasoning and construction. Reasoning is how to prove a hypothesis, one intellectually sound step at a time. In geometry, we called the results “proofs”. Construction is taking a limited set of tools and operations and, one intellectually sound step at a time, inventing more complicated operations. In Geometry class, we started with:

- A flat piece of paper that can be marked.

- A pencil for marking.

- A straight edge for marking straight lines.

- A compass for drawing arcs of a set radius centered at a point on the paper.

Proof and construction in high school geometry are intellectual exercises: training for our high school brains. In the world I entered as an adult, and in today’s world, they don’t have a lot of practical application. My Dad, who came as close as anyone to being a professional applied mathematician, never had to trisect an arbitrary angle with only a straight edge and compass. And if he had been required to do that, he would have known that it isn’t possible, and I’m confident he could have proved it!

I’d like you to take at least 10 minutes to watch some (or better, all) of this video reviewing basic constructions you probably covered in your high school geometry course.

Does any of that seem vaguely familiar to you? The world doesn’t need you to know the specifics to function as an adult. Nor does your job, in all likelihood. But you will be a more effective adult if you know that many of the complicated systems we construct and that you use daily are built from a few very simple principles, with a simple set of rules applied. You should realize that some things can’t be done with some systems of tools and rules. This is an important concept!

It turns out that LLMs are just like this. Here is how every LLM works:

- A large set of numerical weights, which define relationships among sets of nearby tokens.

- A context window: the ordered set of existing tokens you’re working with.

- A way to choose one next token, using the weights and context window as inputs. The choice is partly random, and the token only has to be good enough, not the single best.

The completion algorithm simply runs the operation in the third bullet over and over, adding each chosen token to the end of the context window, until it encounters a special “I’m done” token.
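Here is a minimal sketch of that loop in Python, for readers who want to see it run. Everything in it is made up for illustration: the tiny `WEIGHTS` table, the `<start>` and `<end>` token names, and the helper names `next_token` and `complete` are my assumptions, not any real model or library. A real LLM looks at the whole context window and has billions of weights; this toy looks only at the last token.

```python
import random

END = "<end>"  # hypothetical "I'm done" token

# Toy "weights": for each previous token, how strongly each next token is favored.
# Assumption for illustration only; a real model uses the whole context window.
WEIGHTS = {
    "<start>": {"wax": 1.0},
    "wax": {"on": 0.5, "off": 0.5},
    "on": {"wax": 0.7, END: 0.3},
    "off": {"wax": 0.3, END: 0.7},
}


def next_token(weights, context, rng):
    """Pick one good-enough next token at random, favoring higher weights."""
    options = weights[context[-1]]
    tokens = list(options)
    return rng.choices(tokens, weights=[options[t] for t in tokens], k=1)[0]


def complete(weights, context, rng, max_tokens=50):
    """Run the one step repeatedly until the 'I'm done' token shows up."""
    context = list(context)  # copy, so the caller's list is left alone
    for _ in range(max_tokens):  # safety cap so the toy always stops
        token = next_token(weights, context, rng)
        if token == END:
            break
        context.append(token)  # the chosen token becomes part of the context
    return context


rng = random.Random(0)  # fixed seed so the run is repeatable
result = complete(WEIGHTS, ["<start>"], rng)
print(" ".join(result))
```

Change the seed and you get a different run of “wax on” and “wax off”. Two items and one step, nothing else.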

Everything else you think you see the LLM doing is just repeated application of these two items and one step. Here is an (old) video of me showing how chat is an illusion:

If you didn’t watch, I showed how chat is just running the completion algorithm until it’s the user’s turn to type something.
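A rough sketch of the same idea in Python, under stated assumptions: the `User:` and `Assistant:` labels, the `USER_TURN` stop marker, and the functions `chat_turn` and `scripted_next_token` are hypothetical names I chose for illustration. The “model” here is just a canned list of tokens standing in for a real one. The point is only that the chat is one growing transcript, and a turn ends when the completion starts writing the user’s line.

```python
USER_TURN = "\nUser:"  # hypothetical marker: the completion has reached the user's turn


def scripted_next_token(script):
    """Stand-in for a real model: hands back canned tokens one at a time."""
    tokens = iter(script)
    return lambda text: next(tokens)


def chat_turn(transcript, user_message, next_token, max_tokens=100):
    """Add the user's line, then complete until it's the user's turn again."""
    transcript += f"User: {user_message}\nAssistant:"
    reply = ""
    for _ in range(max_tokens):
        reply += next_token(transcript + reply)  # one completion step
        if reply.endswith(USER_TURN):
            # The completion began writing the user's line: cut it off and stop.
            reply = reply[: -len(USER_TURN)]
            break
    return transcript + reply + "\n", reply.strip()


model = scripted_next_token([" Wax", " on", ".", "\n", "User:", " (never reached)"])
transcript, reply = chat_turn("", "How do I learn?", model)
print(reply)
print(transcript)
```

There is no separate “chat” machinery in this sketch. The next turn would call `chat_turn` again with the longer transcript.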

If your team has a failing AI project underway, here is how I would lead it to success:

- Your team watches the original The Karate Kid (1984) movie.

- We will discuss “wax on, wax off”. This is how actual work gets done. More importantly, it’s how we as humans internalize the work that gets done.

- We will all watch and replicate the Geometry Constructions video from above. Each team member will perform all 15 constructions using a straight edge and compass. This is a one-day project. We will frame some and decorate the office.

- Every use of an LLM in the project will be constructed, one step at a time, from the two items and one step described above. We will find some constructions that can’t be done or don’t make sense.

- We will focus on the ones that work and make sense, so we can call them wins.

I would appreciate your reactions and comments on my LinkedIn repost.